“I grew up in Indiana,” writes Chris Huntington, “and saved a few thousand comic books in white boxes for the son I would have someday. . . . Despite my good intentions, we had to leave the boxes of yellowing comics behind when we moved to China.”

I grew up in Pennsylvania and only moved down to Virginia, so I still have one dented box of my childhood comics to share with my son. He pulled it down from the attic last weekend.

“I forgot how much fun these are,” he said.

Cameron is twelve and has lived all those years in our southern smallville of a town. Chris Huntington’s son, Dagim, is younger and was born in Ethiopia. Huntington laments in “A Superhero Who Looks Like My Son” (a recent post at the New York Times parenting blog, Motherlode) how Dagim stopped wearing his Superman cape after he noticed how much darker his skin looked next to his adoptive parents’.

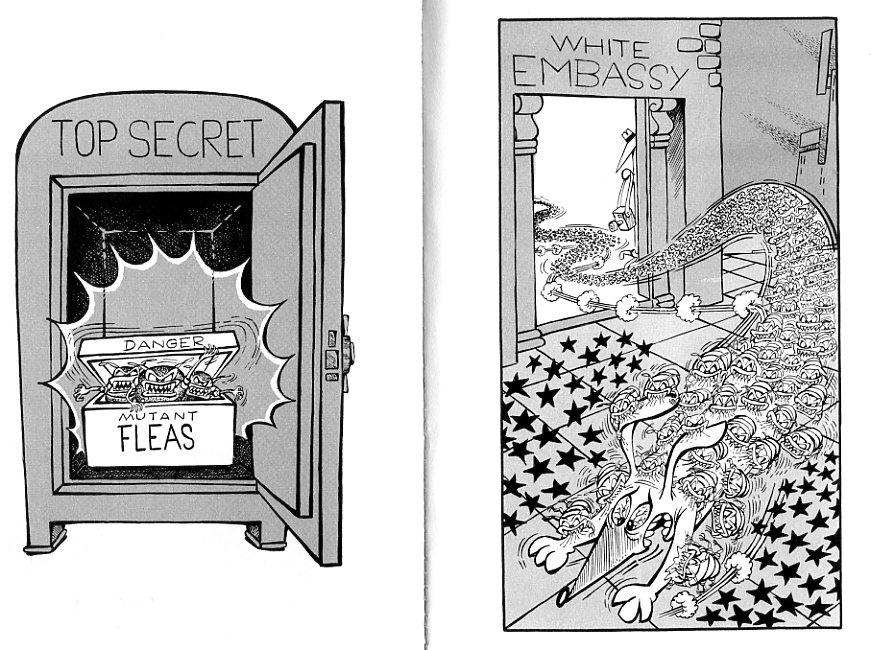

Cameron can flip to any page in my bin of comics and admire one of those “big-jawed white guys” Huntington and I grew up on. Dagim can’t. That, argues Junot Diaz, is the formula for a supervillain: “If you want to make a human being into a monster, deny them, at the cultural level, any reflection of themselves.” Fortunately, reports Huntington, Marvel swooped to the rescue with a black-Hispanic Spider-Man in 2011, giving Dagim a superhero to dress as two Halloweens running.

Glenn Beck called Ultimate Spider-Man just “a stupid comic book,” blaming the facelift on Michelle Obama and her assault on American traditions. But Financial Times saw the new interracial character as the continuing embodiment of America: “Spider-Man is the pure dream: the American heart, in the act of growing up and learning its path.” I happily side with Financial Times, though the odd thing about their opinion (aside from the fact that something called Financial Times HAS an opinion about a black-Hispanic Spider-Man) is the “growing up” bit.

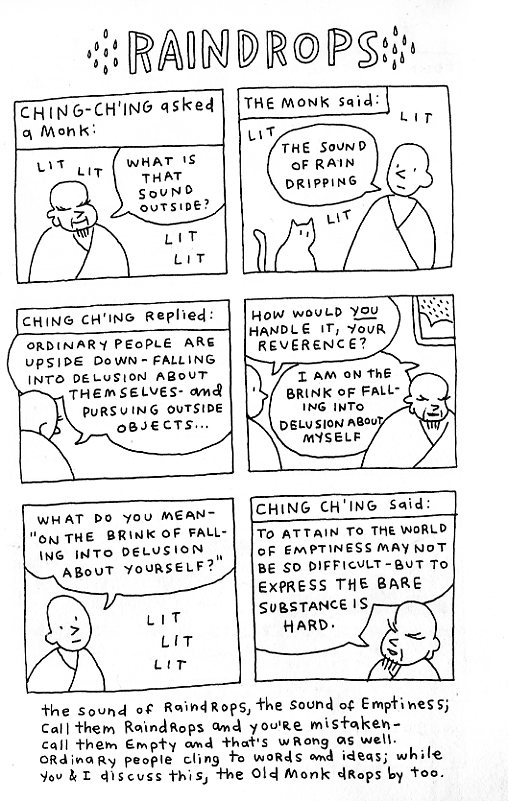

Peter Parker was a fifteen-year-old high schooler when that radioactive spider sank its fangs into his adolescent body. Instant puberty metaphor. “What’s happening to me? I feel—different! As though my entire body is charged with some sort of fantastic energy!” I remember the feeling.

It was 1962. Stan Lee’s publisher didn’t want a teenage superhero. The recently reborn genre was still learning its path. Teenagers could only be sidekicks. The 1940s swarmed with Robin knock-offs, but none of them ever got to grow up, to become adult heroes, to become adult anythings.

Captain Marvel’s little alter ego Billy Batson never aged. None of the Golden Agers did. Their origin stories moved with them through time. Bruce Wayne always witnessed his parents’ murder “Some fifteen years ago.” He never grew past it. For Billy and Robin, that meant never growing at all. They were marooned in puberty.

Stan Lee tried to change that. Peter Parker graduated high school in 1965, right on time. He started college the same year. The bookworm scholarship boy was on track for a 1969 B.A.

But things don’t always go as planned. Co-creator Steve Ditko left the series a few issues later (#38, on stands the month I was born). Lee scripted plots with artist John Romita until 1972, when Lee took over his uncle’s job as publisher. He was all grown up.

Peter doesn’t make it to his next graduation day till 1978. If I remember correctly (I haven’t read Amazing Spider-Man #185 since I bought it from a 7-Eleven comic book rack for “Still Only Thirty-five” cents when I was twelve), he missed a P.E. credit and had to wait for his diploma. Thirteen years as an undergraduate is a purgatorial span of time. (I’m an English professor now, so trust me, I know.)

Except it isn’t thirteen years. That’s no thirty-two-year-old in the cap and gown on the cover. Bodies age differently inside comic books. Peter’s still a young twentysomething. His first twenty-eight issues spanned less than three years, same for us out here in the real world. But during the next 150, things grind out of sync.

It’s not just that Peter’s clock moves more slowly. His life is marked by the same external events as ours. While he was attending Empire State University, Presidents Johnson, Nixon, Ford and Carter appeared multiple times in the Marvel universe. Their four-year terms came and went, but not Peter’s four-year college program. How can “the American heart” learn its path when it’s in a state of arrested development?

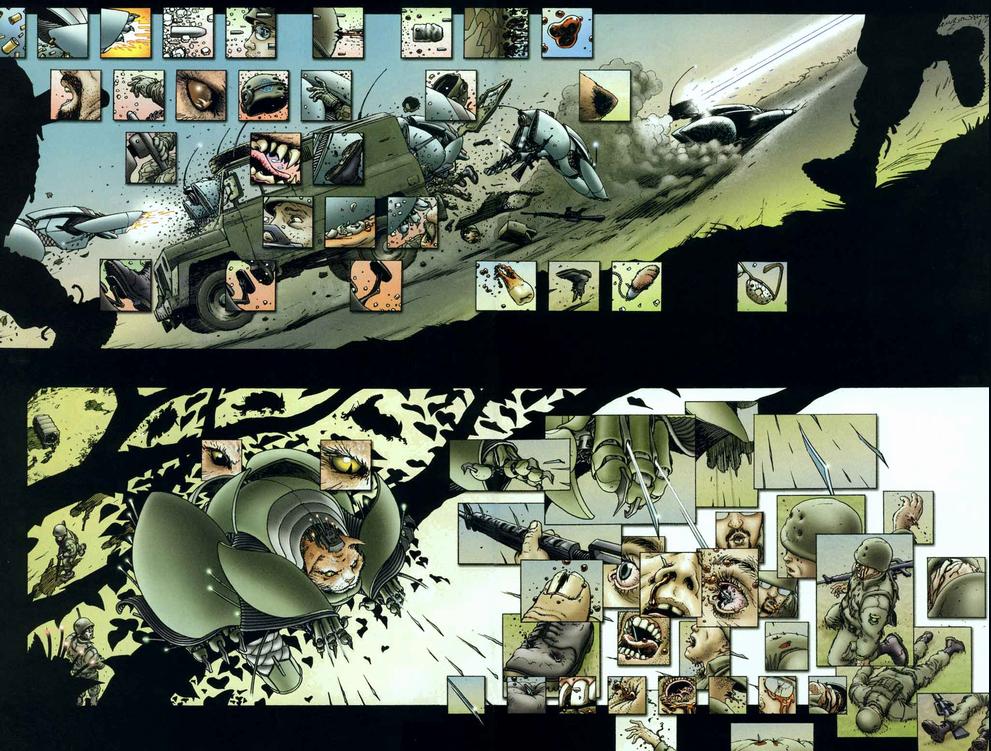

Slowing time wasn’t enough either. Marvel wanted to reverse the aging process. They wanted the original teen superhero to be a teenager again. When their 1998 reboot didn’t take hold (John Byrne had better luck turning back the Man of Steel’s clock), Marvel invented an entire universe. When Ultimate Spider-Man premiered in 2000, the new Peter Parker was fifteen again. And he was going to stay that way for a good long while. Writer Brian Bendis took seven issues to cover the events Lee and Ditko told in eleven pages.

But even with slo-mo pacing, Peter turned sixteen again in 2011. So after a half century of webslinging, Marvel took a more extreme countermeasure to unwanted aging. They killed him. But only because they had the still younger Spider-Man waiting in the wings. Once an adolescent, always an adolescent.

The newest Spider-Man, Miles Morales, started at thirteen. What my son turns next month. He and Miles will start shaving in a couple years. If Miles isn’t in the habit of rubbing deodorant in his armpits regularly, someone will have to suggest it. I’m sure he has cringed through a number of Sex Ed lessons inflicted by well-meaning but clueless P.E. teachers. My Health classes were always divided, mortified boys in one room, mortified girls across the hall. My kids’ schools follow the same regime. Some things don’t change.

Miles doesn’t live in Marvel’s main continuity, so who knows if he’ll make it out of adolescence alive. His predecessor died a virgin. Ultimate Peter and Mary Jane had talked about sex, but decided to wait. Sixteen, even five years of sixteen, is awfully young. Did I mention my daughter turned sixteen last spring?

Peter didn’t die alone though. Mary Jane knew his secret. I grew up with and continue a policy of open bedrooms while opposite-sex friends are in the house, but Peter told her while they sat alone on his bed, Aunt May off who knows where. The scene lasted six pages, which is serious superhero stamina. It’s mostly close-ups, then Peter springing into the air and sticking to the wall as Mary Jane’s eyes get real real big. Way better than my first time. It’s also quite sweet, the trust and friendship between them. For a superhero, for a pubescent superhero especially, unmasking is better than sex. It’s almost enough to make me wish I could reboot my own teen purgatory. Almost.

Meanwhile the Marvel universes continue to lurch in and out of time, every character ageless and aging, part of and not part of their readers’ worlds. It’s a fate not even Stan Lee could save them from. Cameron and Dagim will continue reading comic books, and then they’ll outgrow them, and then, who knows, maybe that box will get handed to a prepubescent grandson or granddaughter.

The now fifty-one-year-old Spider-Man, however, will continue not to grow up. But he will continue to change. “Maybe sooner or later,” suggests artist Sara Pichelli, “a black or gay — or both — hero will be considered something absolutely normal.” Spider-Man actor Andrew Garfield would like his character to be bisexual, a notion Stan Lee rejects (“I figure one sex is enough for anybody”). But anything’s possible. That’s what Huntington learned from superheroes, the quintessentially American lesson he wants to pass on to his son growing up in Singapore.

May that stupid American heart never stop finding its path.