Dear Centre for the Study of Existential Risk,

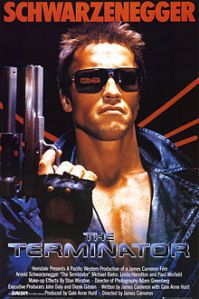

It’s rare to find folks willing to look sillier than me (an English professor who takes seriously the study of superheroes). Your hosting institution (Cambridge) dwarfs my tiny liberal arts college, and your collective degrees (Philosophy, Cosmology & Astrophysics, Theoretical Physics) and CV (dozens of books, hundreds of essays, and, oh yeah, Skype) make me look like an under-achieving high schooler, which I was when the sci-fi classic The Terminator was released in 1984.

And yet it’s you, not me, taking James Cameron’s robot holocaust seriously. Or, as you urge: “stop treating intelligent machines as the stuff of science fiction, and start thinking of them as a part of the reality that we or our descendants may actually confront.”

So, to clarify, by “existential risk,” you don’t mean the soul-wrenching ennui kind. We’re talking the extinction of the human race. So bravo. With all the press drones are getting lately, those hovering Skynet bombers don’t look so far-fetched anymore.

Your website went online this winter, and to help the cause, I enlisted my book club to peruse the introductory links of articles and lectures on your “Resources & reading” page. It’s good stuff, but I think you should expand the list a bit. It’s all written from the 21st century. And yet the century you seem most aligned with is the 19th.

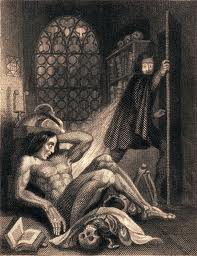

I know, barring some steampunk time travel plot, it’s unlikely the Victorians are going to invent the Matrix. But reading your admonitory essays, I sense you’ve set the controls on your own time machine in the wrong direction. It was H.G. Wells who warned in 1891 of the “Coming Beast,” “some now humble creature” that “Nature is, in unsuspected obscurity, equipping . . . with wider possibilities of appetite, endurance, or destruction, to rise in the fullness of time and sweep homo away.” Your stuff of science fiction isn’t William Gibson’s but Mary Shelley’s. The author of Frankenstein warned in 1818 that “a race of devils would be propagated upon the earth, who might make the very existence of the species of man a condition precarious and full of terror.”

Although today’s lowly machines pose no real competitive threat (it’s still easier to teach my sixteen-year-old daughter how to drive a car), your A.I.-dominated future simmers with similar anxiety: “Would we be humans surviving (or not) in an environment in which superior machine intelligences had taken the reins, so to speak?” As early as 2030, you prophesy “life as we know it getting replaced by more advanced life,” asking whether we should view “the future beings as our descendants or our conquerors.”

Either answer is a product of the same, oddly applied paradigm: Evolution.

Why do you talk about technology as a species?

Darwin quietly co-authors much of your analysis: “we risk yielding control over the planet to intelligences that are simply indifferent to us . . . just ask gorillas how it feels to compete for resources with the most intelligent species – the reason they are going extinct is not (on the whole) because humans are actively hostile towards them, but because we control the environment in ways that are detrimental to their continuing survival.” Natural selection is an allegory, yet you posit literally that our “most powerful 21st-century technologies – robotics, genetic engineering, and nanotech – are threatening to make humans an endangered species.”

I’m not arguing that these technologies are not as potentially harmful as you suggest. But talking about those potentials in Darwinistic terms (while viscerally effective) drags some unintended and unacknowledged baggage into the conversation. To express your fears, you stumble into the rhetoric of miscegenation and eugenics.

To borrow a postcolonial term, you talk about A.I. as if it’s a racial other, the nonhuman flipside of your us-them dichotomy. You worry “how we can best coexist with them,” alarmed because there’s “no reason to think that intelligent machines would share our values.” You describe technological enhancement as a slippery slope that could jeopardize human purity. You present the possibility that we are “going to become robots or fuse with robots.” Our seemingly harmless smartphones could lead to smart glasses and then brain implants, ending with humans “merging with super-intelligent computers.” Moreover, “Even if we humans nominally merge with such machines, we might have no guarantees whatsoever about the ultimate outcome, making it feel less like a merger and more like a hostile corporate takeover.” As a result, “our humanity may well be lost.”

In other words, those dirty, mudblood cyborgs want to destroy our way of life.

Once we allow machines to fornicate with our women, their half-breed offspring could become “in some sense entirely posthuman.” Even if they think of themselves “as descendants of humans,” these new robo-mongrels may not share our goals (“love, happiness”) and may look down at us as indifferently as we regard “bugs on the windscreen.”

“Posthuman” sounds futuristic, but it’s another 19th-century throwback. Before George Bernard Shaw rendered “Übermensch” as “Superman,” Nietzsche’s first translator went with “beyond-man.” “Posthuman” is an equally apt fit.

When you warn us not to fall victim to the “comforting” thought that these future species will be “just like us, but smarter,” do you know you’re paraphrasing Shaw? He declared in 1903 that “contemporary Man” will “make no objection to the production of a race of [Supermen], because he will imagine them, not as true Supermen, but as himself endowed with infinite brains.” Shaw, like you, argued that the Superman will not share our human values: he “will snap his superfingers at all Man’s present trumpery ideals of right, duty, honor, justice, religion, even decency, and accept moral obligations beyond present human endurance.”

Shaw, oddly, thought this was a good thing. He, like Wells, believed in scientific breeding, the brave new thing that, like the fledgling technologies you envision, promised to transform the human race into something superior. It didn’t. But Nazi Germany gave it their best shot.

You quote the wrong line from Nietzsche (“The truth that science seeks can certainly be considered a dangerous substitute for God if it is likely to lead to our extinction”). Add Thus Spake Zarathustra to your “Resources & reading” instead. Zarathustra advocates for the future you most fear, one in which “Man is something that is to be surpassed,” and so we bring about our end by creating the race that replaces us. “What is the ape to man?” asks Zarathustra, “A laughing-stock, a thing of shame. And just the same shall man be to the Superman: a laughing-stock, a thing of shame.”

Sounds like an existential risk to me.

And that’s the problem. In an attempt to map our future, you’re stumbling down the abandoned ant trails of our ugliest pasts. I think we can agree it’s a bad thing to accidentally conjure the specters of scientific racism and Adolf Hitler, but if your concerns are right, the problem is significantly bigger. We’re barreling blindly into territory that needs to be charted. So, yes, please start charting, but remember, the more your 21st century resembles the 19th, the more likely you’re getting everything wrong.